In short: Docker is a platform and toolkit for developing applications that consist of multiple small containerized components, which can be started, updated and replicated independently of each other.

How it came to be

Factor 1 – Monoliths are hard to handle

Humans have been developing software since the early days of computers. Programming languages have evolved a lot since then, and software projects have grown bigger and bigger. Some companies ran such large “monolith” systems that they could only deploy updates once or twice a year. This made it very hard to keep pace with the competition.

Factor 2 – The cloud

We all know that “the cloud” is just someone else’s computer. We should not be fooled by big providers such as Google Cloud, Amazon Web Services and Microsoft Azure, which tell us that the cloud is the normal way of running software. Of course they want to establish the cloud as the standard for modern IT systems, because it lets them “own” the internet, or be the “base of everything”. Using the cloud simply means taking our stuff and uploading it to the servers of one of those giant companies.

Of course, this comes with many benefits. When running a company, we don’t have to worry about the hardware powering our servers: no need to swap hard drives when they fail, because “the cloud” handles it for us. We can also easily scale our application as needed. But all of this comes at the cost of becoming dependent on one or two big players that are building a monopoly on “powering the internet”.

Result: Docker

Docker was developed to make it easy to build, package and deploy software on cloud-based platforms. Software built with Docker can be rebuilt and redeployed predictably on any other system that runs Docker. Gone are the days of “works on my machine” but “fails on another computer”. There is no need to set up build environments and other development tools by hand anymore: with Docker, they become portable.

Docker Architecture

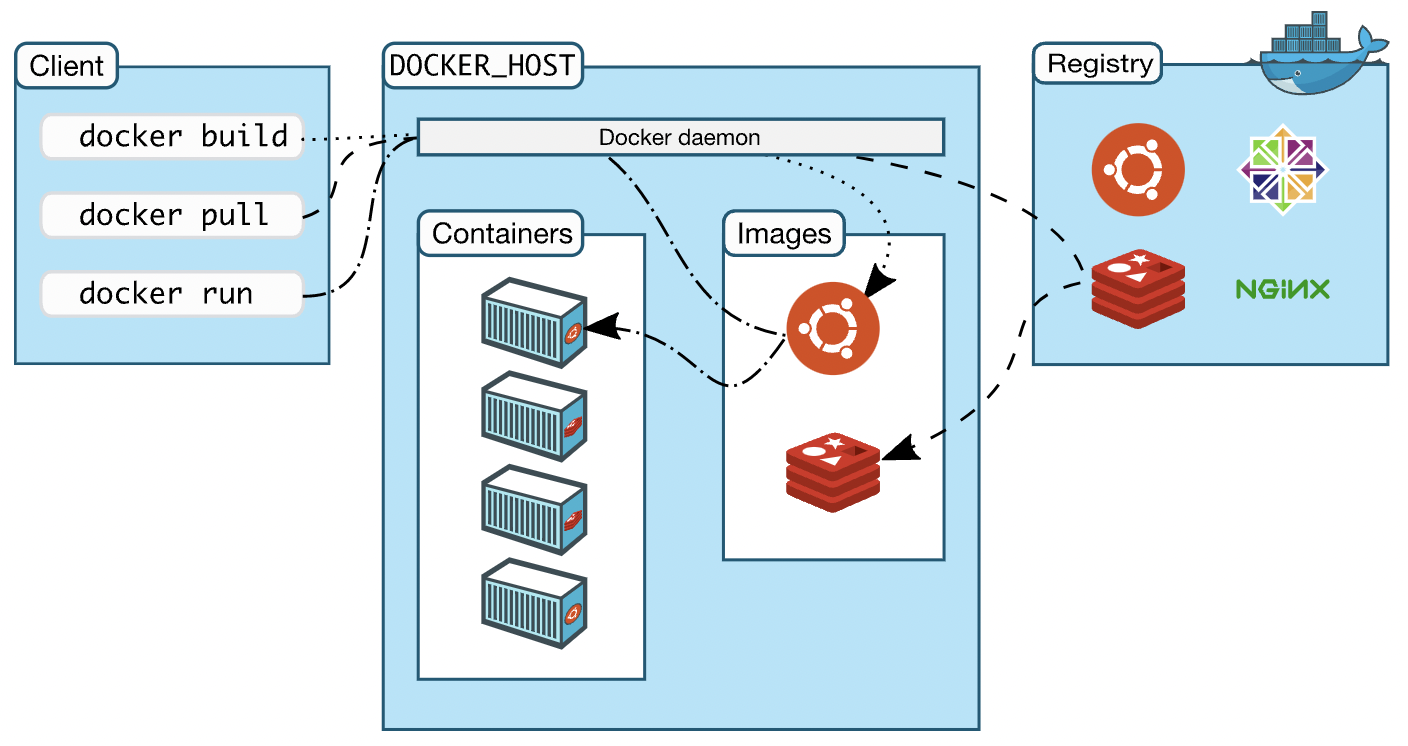

Docker is basically a system of three main components:

- Docker Client

- Docker Host

- Docker Registry

It also uses a set of so-called “Docker objects”, such as:

- images

- containers

- networks

- volumes

- plugins

- …

Docker Client

The Docker client is a piece of software that can be installed on a computer. It provides a command-line interface (the docker command) for communicating with a Docker daemon (“dockerd”). For example, the following command starts a container from the latest Node.js image, pulling the image from the registry first if it is not available locally:

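```shell
# -i keeps STDIN open and -t allocates a pseudo-terminal, so the Node.js REPL stays interactive
docker run -it node:latest
```

Docker Host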

The Docker host usually runs on the same system as the client when developing for personal use, but it can also run on another machine. The host runs the Docker daemon, a server that listens for commands coming in over the Docker Engine API (on Linux, by default through the Unix socket /var/run/docker.sock). It keeps track of downloaded images and other created objects (e.g. networks and which containers belong to which network). It also takes care of communicating with a Docker registry when an image has to be pulled or pushed.
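To make the split between client and daemon more concrete, here is a minimal Python sketch (standard library only) of what the docker client roughly does under the hood: it sends HTTP requests to the daemon. It assumes a Linux host where dockerd listens on its default Unix socket /var/run/docker.sock and where the current user may access that socket (root or a member of the docker group). The names UnixHTTPConnection and docker_api_get are hypothetical helpers made up for this example; /version and /containers/json are endpoints of the Docker Engine API.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 10.0):
        # "localhost" only ends up in the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path: str, socket_path: str = "/var/run/docker.sock"):
    """Send a GET request to the Docker daemon and return the decoded JSON body.

    Hypothetical helper; assumes the daemon listens on `socket_path`.
    """
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        body = response.read()
        if response.status != 200:
            raise RuntimeError(f"Docker API returned {response.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With Docker running, docker_api_get("/version")["Version"] should return the daemon's version string, and docker_api_get("/containers/json") should list the running containers, which is roughly what docker version and docker ps ask the daemon for.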

Docker Registry and Images

To understand what a Docker registry is, we first need to understand the core concept of Docker: the image. An image is a read-only template from which containers are created. Images are built from Dockerfiles, which contain a sequence of steps (instructions) that are processed during the build. An image is made up of multiple layers, and each step in the Dockerfile roughly corresponds to a new layer added on top of the previous ones.
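The stacking of layers can be illustrated with a toy model in Python. This is not how Docker computes real layer digests (those are hashes of each layer's archived file contents). It only mimics how the build cache chains layers: every step's layer sits on top of its parent, so when one step changes, that layer and every layer above it must be rebuilt, while the unchanged layers below it can be reused. The functions build_layers and reusable_layers and the example steps are hypothetical.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Layer:
    step: str       # the Dockerfile step that produced this layer
    parent_id: str  # ID of the layer below ("" for the first layer)
    layer_id: str   # toy ID derived from the parent ID and the step text


def build_layers(steps: List[str]) -> List[Layer]:
    """Toy model: every step adds one layer on top of the previous one.

    Because each ID includes the parent's ID, changing one step changes the IDs
    of that layer and of all layers stacked above it.
    """
    layers: List[Layer] = []
    parent_id = ""
    for step in steps:
        layer_id = hashlib.sha256(f"{parent_id}\n{step}".encode()).hexdigest()[:12]
        layers.append(Layer(step, parent_id, layer_id))
        parent_id = layer_id
    return layers


def reusable_layers(old: List[Layer], new: List[Layer]) -> int:
    """Count how many leading layers of `new` are identical to those in `old`."""
    count = 0
    for a, b in zip(old, new):
        if a.layer_id != b.layer_id:
            break
        count += 1
    return count
```

If only the last of three steps changes (for example, copying the application code), reusable_layers reports that the first two layers can be reused. This is one reason why Dockerfiles usually place rarely changing steps, such as installing dependencies, before frequently changing ones.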

Once an image is ready, it can be uploaded to a Docker registry using the docker push command. The registry stores images and can keep multiple versions of each image, distinguished by tags (for example, node:latest and node:20). Docker Hub is the best-known image registry and the default one used by the docker client.
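Images in a registry are addressed by a reference such as node:latest. The following simplified Python sketch shows how a short name is usually expanded into registry, repository and tag: without a registry host, Docker Hub (docker.io) is assumed, official images live under the library/ namespace, and a missing tag defaults to latest. The function parse_image_ref is hypothetical and skips edge cases that Docker's real reference parser handles, such as validating allowed characters.

```python
from typing import NamedTuple, Optional


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]


def parse_image_ref(ref: str) -> ImageRef:
    """Simplified expansion of an image reference (hypothetical helper)."""
    name, digest = ref, None
    if "@" in name:  # e.g. node@sha256:... pins an exact image content digest
        name, digest = name.split("@", 1)

    # A tag follows the last ":" only if that colon comes after the last "/",
    # so the port in "localhost:5000/app" is not mistaken for a tag.
    tag = None
    last_slash, last_colon = name.rfind("/"), name.rfind(":")
    if last_colon > last_slash:
        name, tag = name[:last_colon], name[last_colon + 1:]

    # The first path component is a registry host if it looks like one.
    first, sep, rest = name.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = "docker.io", name

    # Official Docker Hub images live in the "library" namespace.
    if registry == "docker.io" and "/" not in repository:
        repository = "library/" + repository

    if tag is None and digest is None:
        tag = "latest"  # default tag when none is given
    return ImageRef(registry, repository, tag, digest)
```

Under these rules, node and node:latest both expand to docker.io/library/node:latest, which is why docker run node and docker run node:latest refer to the same image.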

Further Reading

If you want to learn more about Docker and its internal components, you can check out the official documentation at https://docs.docker.com/get-started/overview/.
